How To Become A Data Engineer

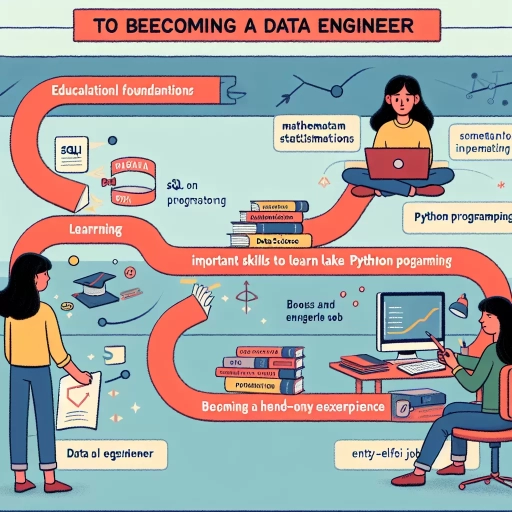

The field of data engineering is growing rapidly, and demand for skilled data engineers is rising with it. As a result, many people are looking to move into this career. Becoming a successful data engineer, however, takes a combination of technical skills, conceptual knowledge, and hands-on experience. This article covers three areas. The first is acquiring core skills: programming languages, data storage systems, and data processing tools. The second is building a strong foundation in data architecture, data modeling, and data governance. The third is staying up to date with industry trends and best practices. We begin with the first step: acquiring the necessary skills and knowledge.

Acquiring the Necessary Skills and Knowledge

Acquiring the necessary skills and knowledge is crucial for success in data engineering. The role calls for a combination of technical skills, business understanding, and soft skills such as communication. Three essential areas of focus for aspiring data engineers are learning programming languages, understanding data storage solutions, and becoming familiar with data processing frameworks. With these skills, you can reliably collect, store, and transform large volumes of data so that analysts and data scientists can turn it into informed business decisions. The natural starting point is the fundamentals: programming languages such as Python, Java, and Scala.

Learning Programming Languages such as Python, Java, and Scala

Learning programming languages such as Python, Java, and Scala is essential for data engineers, because all three are widely used in the field. Python is a popular choice thanks to its simplicity, flexibility, and extensive ecosystem of libraries, including NumPy, pandas, and scikit-learn, which make it well suited to data manipulation, analysis, and machine learning tasks. Java is widely used for building large-scale data processing systems; Apache Hadoop, for example, is written in Java, and Apache Spark runs on the Java Virtual Machine (JVM). Scala, a multi-paradigm language that also runs on the JVM, is popular for building data pipelines and processing systems thanks to its concise syntax and strong type system; Spark itself is written largely in Scala. Learning these languages prepares you to design, build, and maintain large-scale data systems and to work with the frameworks and tools built on them. Along the way, you will also sharpen the problem-solving and analytical skills needed to work with complex data systems.
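To give a feel for the everyday data manipulation Python is used for, here is a minimal sketch that reads a small CSV extract and totals an amount per region. It deliberately uses only the standard library (`csv`, `io`, `collections`) so it runs anywhere; the sample data and the `total_by_region` function are hypothetical, made up for illustration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample data; in practice this would come from a file or an API.
RAW_CSV = """region,amount
north,120.50
south,80.00
north,30.25
east,
south,19.75
"""


def total_by_region(csv_text: str) -> dict[str, float]:
    """Sum the 'amount' column per region, skipping rows with a missing amount."""
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        amount = row["amount"].strip()
        if not amount:  # skip incomplete records instead of failing the whole job
            continue
        totals[row["region"]] += float(amount)
    return dict(totals)


if __name__ == "__main__":
    print(total_by_region(RAW_CSV))
```

With pandas, roughly the same aggregation is `df.groupby("region")["amount"].sum()`, although pandas would report the all-missing `east` group as `0.0` rather than omitting it.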

Understanding Data Storage Solutions like Hadoop, Cassandra, and MongoDB

Understanding data storage solutions is crucial for designing and implementing efficient data pipelines. Three widely used options are Hadoop, Cassandra, and MongoDB, each with its own strengths and use cases. Hadoop, an open-source distributed computing framework, is well suited to storing and batch-processing very large datasets. Its Hadoop Distributed File System (HDFS) provides scalable, fault-tolerant storage by replicating data blocks across machines, which has made it a common choice for big data analytics. Cassandra is a NoSQL wide-column database designed to handle high-velocity, high-volume data across many commodity servers. Its distributed, masterless architecture and tunable consistency model make it a good fit for applications that need high availability and low-latency access at scale. MongoDB is a NoSQL document database known for its flexible schema, which makes it a popular choice for modern web and mobile applications whose data structures change frequently. Knowing the strengths and weaknesses of each lets data engineers choose the right store for a given workload and design pipelines that meet their organization's specific needs.
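The "tunable consistency" mentioned for Cassandra comes down to simple quorum arithmetic. With N replicas of each row, a write acknowledged by W replicas and a read that consults R replicas are guaranteed to overlap in at least one replica when R + W > N. The sketch below expresses that rule in plain Python. The `ConsistencySetting` class and `quorum` helper are hypothetical illustrations, not part of any Cassandra driver API, and real systems also need conflict resolution (Cassandra uses timestamps) on top of the overlap guarantee.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsistencySetting:
    replication_factor: int  # N: copies of each row kept in the cluster
    write_replicas: int      # W: replicas that must acknowledge a write
    read_replicas: int       # R: replicas consulted on a read

    def __post_init__(self) -> None:
        for name, value in (("write_replicas", self.write_replicas),
                            ("read_replicas", self.read_replicas)):
            if not 1 <= value <= self.replication_factor:
                raise ValueError(f"{name} must be between 1 and {self.replication_factor}")

    def reads_see_latest_write(self) -> bool:
        # If R + W > N, every set of replicas read overlaps every set written.
        return self.read_replicas + self.write_replicas > self.replication_factor


def quorum(replication_factor: int) -> int:
    """Majority of replicas, the size used by a QUORUM-style consistency level."""
    return replication_factor // 2 + 1


if __name__ == "__main__":
    n = 3
    print(ConsistencySetting(n, quorum(n), quorum(n)).reads_see_latest_write())
    print(ConsistencySetting(n, 1, 1).reads_see_latest_write())
```

The trade-off is visible in the numbers: reading and writing at a quorum of 2 out of 3 replicas keeps reads consistent, while reading and writing from a single replica is faster and more available but may return stale data.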

Familiarizing Yourself with Data Processing Frameworks like Apache Spark and Apache Beam

Familiarizing yourself with data processing frameworks like Apache Spark and Apache Beam is a crucial step in becoming a data engineer. These frameworks are designed for large-scale data processing and provide robust tools for ingesting, transforming, and analyzing data. Apache Spark is a popular open-source distributed processing engine with high-level APIs in Java, Python, and Scala, which makes it straightforward to write scalable data processing applications. Apache Beam, by contrast, is a unified programming model: you define a batch or streaming pipeline once and execute it on one of several execution engines (called runners), including Spark, Flink, and Google Cloud Dataflow. Learning these frameworks teaches you to process and analyze large datasets, perform data transformations, and build pipelines for complex workflows. It also gives you hands-on experience with core concepts such as batch processing, stream processing, and data aggregation. To get started, work through the official Spark and Beam documentation and tutorials, and practice by building small applications with each. Online courses and workshops can deepen your understanding and help you keep up with new developments. Mastering these frameworks will equip you to meet the demands of a data engineering role and build scalable, efficient data processing systems.
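Both frameworks build on the same map, group-by-key ("shuffle"), and reduce pattern for batch aggregation. The following pure-Python word count is a single-machine sketch of that pattern, not Spark or Beam code. In PySpark the equivalent would chain `flatMap`, `map`, and `reduceByKey` on an RDD; in the Beam Python SDK it would use `beam.FlatMap`, `beam.Map`, and `beam.CombinePerKey`. The function names here are hypothetical.

```python
from collections import defaultdict
from functools import reduce
from typing import Callable, Iterable, TypeVar

K = TypeVar("K")
V = TypeVar("V")


def map_phase(lines: Iterable[str]) -> Iterable[tuple[str, int]]:
    """Emit (word, 1) for every word, like a flatMap followed by a map."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def group_by_key(pairs: Iterable[tuple[K, V]]) -> dict[K, list[V]]:
    """Collect all values for each key; on a cluster this is the shuffle step."""
    groups: dict[K, list[V]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(groups: dict[K, list[V]], combine: Callable[[V, V], V]) -> dict[K, V]:
    """Fold each key's values into a single result."""
    return {key: reduce(combine, values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> dict[str, int]:
    return reduce_phase(group_by_key(map_phase(lines)), lambda a, b: a + b)


if __name__ == "__main__":
    print(word_count(["Spark and Beam", "beam runs on Spark"]))
```

What the frameworks add on top of this pattern is distribution: they partition the input across machines, move the grouped keys over the network during the shuffle, and recover from worker failures.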

Building a Strong Foundation in Data Engineering

A strong foundation in data engineering is what allows an organization to rely on its data when making business decisions. Building that foundation requires data engineers to understand a core set of concepts and principles: data modeling and database design, data warehousing and ETL, and data security and governance. With these fundamentals in hand, data engineers can design and implement data systems that are scalable, reliable, and efficient, and that support business growth and innovation. We start with data modeling and database design, which underpin everything else.

Understanding Data Modeling and Database Design Principles

Understanding data modeling and database design principles is a crucial part of a strong foundation in data engineering. Data modeling means creating a conceptual representation of the data, made up of entities, attributes, and relationships, so that the data is organized in a way that is easy to understand and analyze. For example, an online store might identify the entities customers, orders, and products, give them attributes such as names, addresses, and prices, and define relationships such as a customer placing an order or a product appearing in an order. A clear, concise data model helps keep data consistent and accurate as it grows. Database design, in turn, focuses on the physical implementation of that model, including the choice of database management system, how data is stored, and how it is retrieved. A well-designed database handles large volumes of data, supports fast queries, and enforces data integrity and security. Good modeling and design also make it easier to spot data quality issues, optimize storage and retrieval, and meet regulatory requirements. Together, these principles give data engineers a robust infrastructure for business intelligence, analytics, and data science applications, and a solid base for their own careers.
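The customers, orders, and products example translates directly into a relational schema. The sketch below uses Python's built-in `sqlite3` module with an in-memory database so it runs without a server. The table and column names are illustrative assumptions. It shows a one-to-many relationship (customer to orders), a many-to-many relationship resolved by an `order_items` junction table, and integrity rules enforced by the database itself.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    address     TEXT
);
CREATE TABLE products (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    price      NUMERIC NOT NULL CHECK (price >= 0)
);
-- One customer places many orders (one-to-many).
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id)
);
-- Orders and products are many-to-many, resolved by a junction table.
CREATE TABLE order_items (
    order_id   INTEGER NOT NULL REFERENCES orders(order_id),
    product_id INTEGER NOT NULL REFERENCES products(product_id),
    quantity   INTEGER NOT NULL CHECK (quantity > 0),
    PRIMARY KEY (order_id, product_id)
);
"""


def create_database() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript(SCHEMA)
    return conn
```

Because the constraints live in the schema, an order that references a nonexistent customer is rejected with `sqlite3.IntegrityError` rather than silently creating orphaned data.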

Learning Data Warehousing and ETL Concepts

Learning data warehousing and ETL (Extract, Transform, Load) concepts is a crucial step in building a strong foundation in data engineering. A data warehouse is a centralized repository that consolidates data from many sources in one place, making it easier to access and analyze. ETL is the process of extracting data from those sources, transforming it into a consistent, standardized format, and loading it into the warehouse. Understanding these concepts enables data engineers to design efficient pipelines that deliver accurate, complete data that is ready for analysis. Related topics worth learning include data modeling for the warehouse, data governance, data quality, and data security. Familiarity with ETL tools such as Informatica, Talend, and Microsoft SQL Server Integration Services (SSIS) also helps data engineers manage and process large datasets efficiently. By mastering data warehousing and ETL, data engineers help their organizations get full value from their data.
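The three ETL stages can be sketched end to end in a few dozen lines. This minimal example assumes a hypothetical CSV extract with messy country codes and one malformed amount. It extracts the rows, transforms them (standardizing codes and validating amounts), and loads the clean records into a `fact_orders` table in an in-memory SQLite database that stands in for a warehouse. All names and data here are illustrative, not taken from any particular ETL tool.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: inconsistent casing, stray whitespace, and one bad amount.
SOURCE_CSV = """order_id,country,amount_usd
1, us ,19.99
2,DE,5
3,us,not-a-number
4,Fr,42.50
"""

Record = tuple[int, str, float]


def extract(csv_text: str) -> list[dict[str, str]]:
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict[str, str]]) -> tuple[list[Record], list[dict[str, str]]]:
    """Standardize country codes and parse amounts; set aside rows that fail validation."""
    clean: list[Record] = []
    rejected: list[dict[str, str]] = []
    for row in rows:
        try:
            amount = float(row["amount_usd"])
        except ValueError:
            rejected.append(row)  # keep bad records for inspection instead of dropping them silently
            continue
        clean.append((int(row["order_id"]), row["country"].strip().upper(), amount))
    return clean, rejected


def load(conn: sqlite3.Connection, records: list[Record]) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS fact_orders ("
            "order_id INTEGER PRIMARY KEY, country TEXT NOT NULL, amount_usd REAL NOT NULL)"
        )
        conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", records)


def run_pipeline(csv_text: str, conn: sqlite3.Connection) -> int:
    """Run extract -> transform -> load and return the number of rejected rows."""
    clean, rejected = transform(extract(csv_text))
    load(conn, clean)
    return len(rejected)
```

Returning the rejected rows instead of discarding them is a deliberate design choice: it gives the data quality checks mentioned above something concrete to report on.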

Mastering Data Security and Governance Best Practices

Mastering data security and governance best practices is a crucial part of data engineering, because it protects the confidentiality, integrity, and availability of sensitive data. On the security side, data engineers implement measures such as encryption, access controls, and authentication to protect data both in transit and at rest. On the governance side, they establish clear policies and procedures for data classification, data masking, and data retention so that the organization complies with regulatory requirements and industry standards. Regular security audits and risk assessments help identify vulnerabilities so they can be remediated before they are exploited. Strong security and governance build trust with stakeholders, protect the integrity of data-driven decisions, and let teams respond quickly to emerging threats and changing regulations. By prioritizing them, data engineers create a durable foundation for their practice and can deliver high-quality, trustworthy data products and services.
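Data masking can take several forms. The sketch below shows two common ones using only the standard library. The first is partial masking for display, where most of an email address is hidden. The second is pseudonymization with a keyed hash (HMAC-SHA256): the same input always yields the same token, so joins and counts still work, but without the key nobody can rebuild the mapping by hashing guessed values. The function names and the demo key are hypothetical; in a real system the key would be loaded from a secrets manager, never hard-coded.

```python
import hashlib
import hmac


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the domain: 'alice@example.com' -> 'a****@example.com'."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a well-formed address; hide it entirely
    return local[0] + "*" * (len(local) - 1) + "@" + domain


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Deterministic keyed hash: same input and key give the same 64-character hex token."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    demo_key = b"demo-key-not-for-production"  # placeholder; load from a secrets manager in practice
    print(mask_email("alice@example.com"))
    print(pseudonymize("customer-42", demo_key))
```

A plain unkeyed hash is a weaker choice for identifiers such as emails or account numbers, because an attacker can hash likely values and match them against the stored tokens.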

Staying Up-to-Date with Industry Trends and Best Practices

Data engineering tools and practices change quickly, so staying up to date with industry trends and best practices is essential for remaining competitive and delivering high-quality work. Three effective ways to do this are participating in online communities and forums such as Reddit and Stack Overflow, where you can engage with peers, ask questions, and learn from others; attending conferences and meetups to network with professionals and hear from industry experts; and reading industry blogs and books to keep up with emerging technologies. Building these habits into your routine keeps you informed and ready for new challenges. We begin with online communities and forums.

Participating in Online Communities and Forums like Reddit and Stack Overflow

Participating in online communities and forums is an effective way to stay current with data engineering trends and best practices. Communities such as Reddit's r/dataengineering and r/bigdata, along with Stack Overflow, offer a wealth of information and opportunities for collaboration and knowledge-sharing. Reddit's discussion format lets members share experiences, ask open-ended questions, and get feedback from peers, while Stack Overflow's Q&A format helps you find solutions to specific technical problems and learn from others' expertise. Engaging with these communities keeps you informed about new tools, technologies, and methodologies, and answering other people's questions sharpens your own problem-solving skills. Contributing regularly also builds your reputation and professional network, which can improve your career prospects in this fast-moving field.

Attending Conferences and Meetups to Network with Professionals

Attending conferences and meetups is an excellent way to network with professionals in the data engineering field. These events let you meet experienced data engineers, learn about new technologies and trends, and gain insight into how other teams work. They also help you expand your professional network and build relationships with potential employers or collaborators. Many events offer workshops, tutorials, and hands-on training sessions that can help you build your skills. Options for data engineers include the Data Engineering Summit, larger big data conferences, and local data science and data engineering meetups. Searching for events in your area is a good way to connect with professionals who share your interests. Overall, conferences and meetups are a valuable way to build your network, keep learning, and advance your career as a data engineer.

Reading Industry Blogs and Books to Stay Current with Emerging Technologies

Staying current with emerging technologies is crucial for data engineers who want to remain competitive, and reading industry blogs and books is one of the most effective ways to do it. Blogs provide timely information on the latest trends, tools, and techniques, often written by experienced practitioners who share lessons from real projects. Books provide depth: they offer a comprehensive, detailed treatment of specific topics, which makes them invaluable when you want to understand complex concepts thoroughly. Reading both regularly builds a habit of continuous learning, which is essential in this rapidly evolving field. It also helps you identify gaps in your own knowledge and gives you a roadmap for professional development, so you can keep your skills current and advance your career.