How Do Transformers Work

Transformers have reshaped natural language processing (NLP) and now underpin applications such as machine translation, text summarization, and chatbots. This article explains how they work in three parts. It starts with the basics: what a transformer is, how it differs from earlier neural networks, and what the self-attention mechanism does. It then examines the architecture in more detail, covering the encoder-decoder structure, multi-head attention, and positional encoding. Finally, it surveys applications and recent advances, including the use of transformers in computer vision and multimodal learning.

Understanding the Basics of Transformers

At their core, transformers rely on an architecture that handles sequential data differently from earlier neural networks: rather than reading a sequence one element at a time, they relate every element to every other element directly. Understanding the basics means answering three questions: what a transformer is and what its key components are, how it differs from traditional neural networks, and what role the self-attention mechanism plays. The sections below take these in turn.

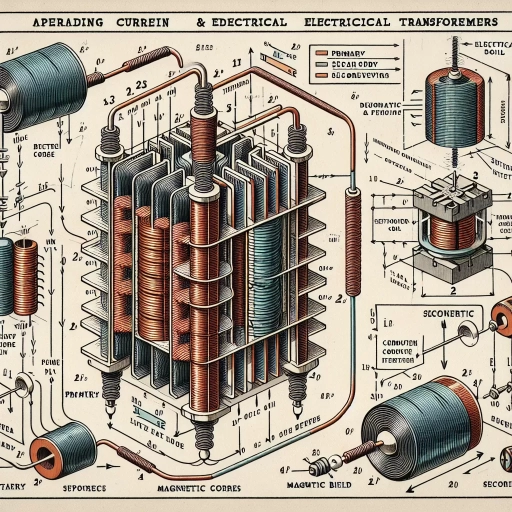

What is a Transformer and its Key Components

A transformer is a neural network architecture for processing sequences, introduced in the 2017 paper "Attention Is All You Need" (Vaswani et al.). It should not be confused with the electrical device of the same name, which transfers energy between circuits through electromagnetic induction. Instead of recurrence or convolution, a transformer relates the elements of a sequence to one another through attention. Its key components are the input embeddings, which turn tokens into vectors; positional encoding, which tells the model where each token sits in the sequence; the self-attention mechanism, usually in its multi-head form, which lets each token draw information from every other token; and a position-wise feed-forward network, which further transforms each token's representation. In the original design these components are stacked into layers and arranged as an encoder, which reads the input sequence, and a decoder, which generates the output sequence. Transformers are widely used for tasks such as machine translation, text summarization, and question answering.

How Transformers Differ from Traditional Neural Networks

Transformers differ from traditional neural networks in several key ways. First, they do not rely on recurrence, as recurrent neural networks (RNNs) do, or on convolution, as convolutional neural networks (CNNs) do, to process sequential data. Instead, they use self-attention to weigh the importance of different input elements relative to each other. Because any two positions are connected directly, transformers handle long-range dependencies more effectively than an RNN, which must carry information step by step, or a CNN, whose filters see only a local window at each layer. Second, transformers process all positions of an input sequence in parallel. This makes them much faster to train than RNNs, which must process a sequence one step at a time. CNNs can also be parallelized, but they need many stacked layers to relate distant positions. Third, transformers use multi-head attention, which enables them to jointly attend to information from different representation subspaces at different positions and so capture patterns that a single attention head might miss. Overall, the transformer architecture is particularly well-suited to natural language processing tasks, such as machine translation, text summarization, and question answering, where understanding the relationships between different parts of the input is crucial.

The Role of Self-Attention Mechanism in Transformers

The self-attention mechanism is a crucial component of the Transformer architecture. It lets the model attend to every part of the input sequence at once and weigh how much each part matters to the element currently being processed. The mechanism uses three vectors for each input element: a Query (Q), a Key (K), and a Value (V), each obtained from the element's representation through a learned linear projection. The Query represents the element being processed, the Key represents an element it is compared against, and the Value carries the content that is passed on. To compute the attention weights, the model takes the dot product of the Query with every Key, divides each score by the square root of the key dimension to keep the scores in a stable range, and applies a softmax function so that the weights sum to one. The weights are then used to compute a weighted sum of the Value vectors, which becomes the new representation of the element being processed. This process is repeated for each input element. Self-attention is particularly useful for tasks such as machine translation, where a word's meaning can depend on words far away in the sentence: because every element is compared with every other element directly, distance in the sequence does not weaken the connection.
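The Query, Key, and Value steps described above can be sketched in a few lines. This is a minimal illustration in plain Python rather than a production implementation: the function names are hypothetical, the learned projections are left out (the toy input is used directly as Q, K, and V), and the division by the square root of the key dimension follows the standard scaled dot-product formulation from the original Transformer paper.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns the new representation of every element and the
    attention weights that produced it.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this query with every key, then scale the scores.
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        out = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: three tokens with 2-dimensional representations.
# Assumption: Q, K, and V are the raw input, with no learned projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)

for token_weights in weights:
    print([round(w, 3) for w in token_weights])
```

Each printed row shows how strongly one token attends to all three tokens. In a trained model the projections that produce Q, K, and V are learned, so the weights reflect relationships found in the data rather than raw vector similarity.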

Delving into the Architecture of Transformers

The Transformer architecture has been widely adopted across natural language processing (NLP). It relies on three key components: the encoder-decoder structure, multi-head attention, and positional encoding. The encoder-decoder structure is the backbone of the Transformer, responsible for processing input sequences and generating output sequences. Multi-head attention lets the model focus on several aspects of the input at once. Positional encoding gives the model access to the order of the input, which attention alone does not provide. This section explores each of these components in turn, beginning with the encoder-decoder structure.

The Encoder-Decoder Structure and its Functionality

The encoder-decoder structure is a fundamental component of the Transformer architecture, enabling the model to process and generate sequential data. The encoder takes in a sequence of tokens, such as words or subword units, and outputs a continuous representation of each token in context. This representation is then passed to the decoder, which generates the output sequence one token at a time. The encoder and decoder are each a stack of identical layers. Each encoder layer has two sub-layers: a self-attention mechanism and a feed-forward neural network. Each decoder layer has three: a masked self-attention mechanism, which prevents a position from attending to later positions in the output; an attention mechanism over the encoder's output; and a feed-forward network. In every layer, attention weighs the importance of different elements relative to each other, and the feed-forward network then transforms the result. Both the encoder and the decoder add positional encoding to their inputs to preserve the order of the sequence. The encoder-decoder structure is particularly well-suited to sequence-to-sequence tasks, such as machine translation, text summarization, and chatbots, where the input and output sequences may be of different lengths.
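Because the decoder produces its output one token at a time, its self-attention is normally restricted so that a position can only look at itself and earlier positions. The sketch below shows one common way to express that restriction, a causal mask applied before the softmax. The function name and the all-zero toy scores are assumptions made for illustration.

```python
import math


def causal_attention_weights(scores):
    """Softmax each row of a square score matrix under a causal mask.

    Row i holds the scores of position i against every position.
    Only positions 0..i are visible to position i; later positions
    receive a weight of exactly zero.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]  # drop scores for future positions
        peak = max(visible)
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (n - i - 1)
        weights.append(row)
    return weights


# Toy scores: four positions that all look equally relevant to each other.
scores = [[0.0] * 4 for _ in range(4)]
weights = causal_attention_weights(scores)

for row in weights:
    print([round(w, 2) for w in row])
```

With equal scores, each position spreads its attention evenly over the positions it is allowed to see, so the first row puts all of its weight on the first position and the last row splits it four ways.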

Multi-Head Attention and its Impact on Performance

Multi-head attention is a major reason transformer models perform well on tasks such as machine translation, text classification, and language modeling. By allowing the model to jointly attend to information from different representation subspaces at different positions, it captures contextual relationships that a single attention computation could blur together. Each attention head has its own learned projections for queries, keys, and values, and computes its own weighted sum of the input elements based on their relevance to the element being processed. The outputs of all heads are then concatenated and linearly transformed to produce the final output. Because each head learns its own projections, different heads can specialize in different kinds of relationships, for example one tracking syntactic structure while another tracks which words refer to the same entity, although what a given head learns is determined by training rather than by design. The heads are independent of one another, so they can be computed in parallel, which keeps the added cost manageable in large-scale applications.
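The split, attend, concatenate, and transform sequence described above can be sketched as follows. This is an illustrative sketch under stated assumptions: the helper names are hypothetical, and the projection matrices are filled with random numbers from a seeded generator as stand-ins for weights that a real model would learn during training.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both lists of rows."""
    return [
        [sum(row[k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for row in a
    ]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        scores = [
            sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
            for k_row in k
        ]
        weights = softmax(scores)
        out.append(
            [sum(w * v_row[j] for w, v_row in zip(weights, v))
             for j in range(len(v[0]))]
        )
    return out


def random_matrix(rows, cols, rng):
    """Placeholder for a learned weight matrix (assumption: random values)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def multi_head_attention(x, num_heads, rng):
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads

    head_outputs = []
    for _ in range(num_heads):
        # Each head projects the input into its own smaller subspace.
        w_q = random_matrix(d_model, d_head, rng)
        w_k = random_matrix(d_model, d_head, rng)
        w_v = random_matrix(d_model, d_head, rng)
        head_outputs.append(
            attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        )

    # Concatenate the heads token by token, then apply the output projection.
    concatenated = [
        [value for head in head_outputs for value in head[i]]
        for i in range(len(x))
    ]
    w_o = random_matrix(d_model, d_model, rng)
    return matmul(concatenated, w_o)


# Toy input: three tokens, model dimension 4, split across two heads.
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
output = multi_head_attention(x, num_heads=2, rng=random.Random(0))
print(len(output), len(output[0]))
```

The output has the same shape as the input, one vector of the model dimension per token, which is what allows attention layers to be stacked. The loop over heads is written sequentially for readability; the heads do not depend on each other.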

Positional Encoding and its Importance in Transformers

Positional encoding is a crucial component of the transformer architecture, because it is what gives the model access to word order. In traditional recurrent neural networks (RNNs), the sequential nature of the data is preserved by the recurrence itself: the network reads one element after another. Transformers rely on self-attention, which processes all positions in parallel and, on its own, treats the input as an unordered set. Positional encoding addresses this by injecting information about each token's position into the input embeddings, typically by adding a position-dependent vector to each embedding, so that the model can distinguish the same token at different positions. This is particularly important in natural language processing, where the order of words in a sentence significantly affects its meaning: "the dog bit the man" and "the man bit the dog" contain the same words. With positional encoding in place, transformers can capture the sequential relationships between input elements, which matters for tasks such as language translation, text classification, and sentiment analysis. Because the encoding is defined per position, the same scheme applies to sequences of different lengths.
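One well-known way to build these position vectors is the sinusoidal scheme from the original Transformer paper, sketched below. It is one option among several (learned position embeddings are also common), and the function names here are hypothetical. Even dimensions use a sine and odd dimensions a cosine, with wavelengths that grow across the dimensions.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal position vectors: one row per position."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add the position vector for each slot to the embedding in that slot."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [
        [e + p for e, p in zip(e_row, p_row)]
        for e_row, p_row in zip(embeddings, pe)
    ]


# Toy example: the same token embedding appears at positions 0 and 2.
token = [0.5, 0.5, 0.5, 0.5]
other = [0.1, 0.2, 0.3, 0.4]
encoded = add_positions([token, other, token])

print(encoded[0])
print(encoded[2])
```

The two printed vectors differ even though they started from the same embedding, which is exactly the information self-attention needs in order to tell the first occurrence of a token from a later one.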

Applications and Advancements of Transformers

Transformers have had a major impact on artificial intelligence well beyond their origins in natural language processing. This section looks at three areas. It first examines how transformers have improved NLP tasks such as language translation, text summarization, and sentiment analysis. It then turns to computer vision and image processing, including image classification, object detection, and image generation. Finally, it considers future directions, including potential uses in domains such as healthcare, finance, and education.

Transformers in Natural Language Processing (NLP) Tasks

Transformers have changed how Natural Language Processing (NLP) systems handle sequential data. Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) networks, process an input sequence one step at a time. Transformers process the entire input sequence simultaneously. This brings two separate benefits: parallel processing makes training on large datasets much faster, and self-attention, which connects every pair of positions directly, captures long-range dependencies and contextual relationships more effectively. Together these have led to state-of-the-art results in many NLP tasks. In machine translation, for instance, Transformers outperformed the recurrent sequence-to-sequence models that preceded them. They have shown similar gains in text classification, sentiment analysis, and question answering, and have been applied successfully to language modeling, text generation, and dialogue systems. The Transformer architecture has become a cornerstone of modern NLP.

Transformers in Computer Vision and Image Processing

Transformers have also become an important architecture in computer vision and image processing. Traditionally, convolutional neural networks (CNNs) were the go-to architecture for image tasks. CNNs build up their view of an image from small local windows, so relating distant regions of an image requires many stacked layers. Transformers were designed for sequences, so applying them to images requires turning an image into a sequence first, usually by dividing it into patches. Self-attention then weighs the importance of every patch relative to every other patch, letting the model relate distant regions in a single layer. This flexibility comes at a price: the cost of self-attention grows quadratically with the number of patches, and vision transformers generally need large amounts of training data to match or exceed CNNs. Given enough data, they have achieved state-of-the-art results in image classification, object detection, and image segmentation, and they have also been used in image generation tasks such as image-to-image translation and image synthesis. The best-known architecture of this kind is the Vision Transformer (ViT), which achieved state-of-the-art results in image classification.
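The Vision Transformer mentioned above feeds an image to a transformer by cutting it into fixed-size, non-overlapping patches and flattening each patch into a vector, so that the image becomes a sequence much like a sentence of tokens. The sketch below shows only that first step, on a tiny grayscale image represented as nested lists. The function name is hypothetical, and the learned projection and position information that ViT applies to each patch afterwards are omitted.

```python
def image_to_patches(image, patch_size):
    """Split a 2-D image into flattened, non-overlapping square patches.

    Patches are returned in reading order: left to right, top to bottom.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch row by row into a single vector.
            patch = [
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Toy 4x4 "image" whose pixel values are simply 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch_size=2)

for patch in patches:
    print(patch)
```

The 4x4 image becomes a sequence of four vectors of length four. Halving the patch size would quadruple the sequence length, which is why patch size has a large effect on the cost of self-attention.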

Future Directions and Potential Applications of Transformers

Research on transformers continues to expand their capabilities and applications. One promising direction is combining transformers with techniques from other areas of AI, such as computer vision and reinforcement learning, to create more comprehensive systems. This could lead to advances in multimodal processing, where a single model learns to process and generate several types of data, such as text, images, and audio, together. Another direction is extending established applications, including machine translation, question answering, sentiment analysis, text summarization, and dialogue systems, to settings where they still perform poorly, such as low-resource languages. Researchers are also investigating transformers for time-series forecasting, recommender systems, and anomaly detection. As this research advances, more uses of the technology are likely to follow.