What Is A State Function

In the realm of thermodynamics and physical chemistry, understanding the concept of state functions is crucial for grasping how systems evolve and interact. State functions are properties that depend solely on the current state of a system, independent of the path taken to reach that state. This fundamental concept underpins many scientific and engineering applications, making it essential to delve into its definition, characteristics, and practical examples. This article will explore the definition and concept of state functions, elucidating what they are and how they differ from path-dependent properties. It will also examine the characteristics and properties that define state functions, highlighting their unique attributes and implications. Finally, it will provide examples and applications of state functions, illustrating their significance in various fields. By understanding these aspects, readers will gain a comprehensive insight into the role and importance of state functions in scientific inquiry and real-world applications. Let us begin by defining and conceptualizing state functions, laying the groundwork for a deeper exploration of their characteristics and practical uses.

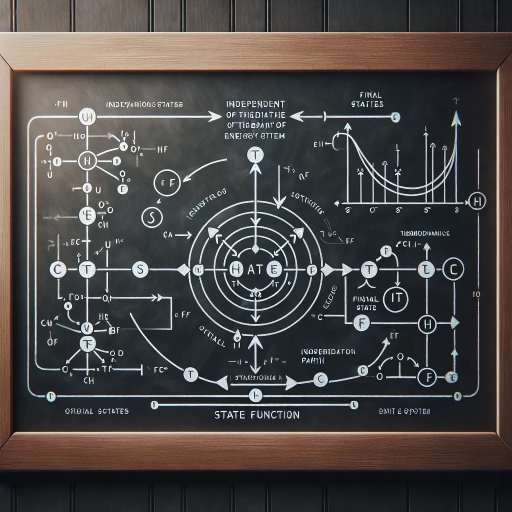

Definition and Concept of State Functions

State functions are fundamental concepts in thermodynamics, providing a robust framework for understanding and analyzing physical and chemical processes. They are defined as properties whose values depend only on the current state of a system, independent of the path taken to reach that state. This matters because it lets scientists quantify system properties precisely and predict changes without knowing a system's full history. In this article, we will examine the definition and concept of state functions from three perspectives: **Mathematical Representation**, **Physical Properties**, and **Thermodynamic Context**. We begin with the **Mathematical Representation**, which covers how state functions are expressed and manipulated mathematically and how they relate to other thermodynamic variables. This sets the stage for their physical implications and thermodynamic significance.

Mathematical Representation

In thermodynamics, the concept of a state function is closely tied to its mathematical representation, which provides a precise and quantifiable way to describe the properties of a system. State functions such as internal energy, enthalpy, and entropy can each be written as a function of the variables that define the system's current state, independent of the path taken to reach that state. Mathematically, this means that their changes can be expressed through partial derivatives with respect to those variables. For instance, the internal energy \(U\) of a system can be expressed as a function of its temperature \(T\), volume \(V\), and the number of moles \(n\) of each component. At fixed composition, the differential form is: \(dU = \left(\frac{\partial U}{\partial T}\right)_V dT + \left(\frac{\partial U}{\partial V}\right)_T dV\). Here, the partial derivatives describe how internal energy changes with temperature at constant volume and with volume at constant temperature, respectively. Similarly, enthalpy \(H\), defined as \(H = U + PV\), where \(P\) is pressure, can be written to show its dependence on temperature and pressure: \(dH = \left(\frac{\partial H}{\partial T}\right)_P dT + \left(\frac{\partial H}{\partial P}\right)_T dP\).

The mathematical representation of state functions also relies on the concept of exact differentials, which explains why these functions are path-independent. An exact differential is one that can be written as the differential of a function of the state variables. Its integral between two states has the same value along every path, and its integral around any closed cycle is zero. For example, the entropy change is defined by \(dS = \frac{\delta Q_{\text{rev}}}{T}\), where \(\delta Q_{\text{rev}}\) is the heat added reversibly to the system at temperature \(T\). Heat on its own is path-dependent, which is why it is written as the inexact differential \(\delta Q\), but dividing the reversible heat by \(T\) yields an exact differential, so the entropy change depends only on the initial and final states of the system.

Moreover, Maxwell relations further tie state functions to their mathematical representation. These relations follow from the equality of mixed second partial derivatives of a state function and provide a way to relate different thermodynamic quantities. For example, the Maxwell relation \(\left(\frac{\partial S}{\partial V}\right)_T = \left(\frac{\partial P}{\partial T}\right)_V\) connects entropy, volume, pressure, and temperature, and it holds precisely because the underlying thermodynamic potential is a state function.

In summary, the mathematical representation of state functions is a cornerstone of thermodynamics, enabling precise and quantitative descriptions of system properties. Differential forms, partial derivatives, exact differentials, and Maxwell relations not only describe how state functions behave but also show why they are path-independent, the characteristic that distinguishes them from path-dependent quantities such as heat and work.
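As a worked check of the exactness idea above, consider a reversible change of \(n\) moles of an ideal gas whose total heat capacity \(C_V\) is constant. The ideal gas and the constant \(C_V\) are assumptions chosen for illustration; they are not specified in the text. The sketch applies the mixed-partial-derivative test first to the reversible heat and then to the reversible heat divided by \(T\).

```latex
\begin{align*}
\delta Q_{\text{rev}} &= dU + P\,dV = C_V\,dT + \frac{nRT}{V}\,dV \\[4pt]
\left(\frac{\partial C_V}{\partial V}\right)_T &= 0
\;\neq\;
\left(\frac{\partial}{\partial T}\,\frac{nRT}{V}\right)_V = \frac{nR}{V}
&&\Rightarrow\ \delta Q_{\text{rev}}\ \text{is inexact} \\[8pt]
dS = \frac{\delta Q_{\text{rev}}}{T} &= \frac{C_V}{T}\,dT + \frac{nR}{V}\,dV \\[4pt]
\left(\frac{\partial}{\partial V}\,\frac{C_V}{T}\right)_T &= 0
\;=\;
\left(\frac{\partial}{\partial T}\,\frac{nR}{V}\right)_V
&&\Rightarrow\ dS\ \text{is exact} \\[8pt]
\Delta S &= C_V \ln\frac{T_2}{T_1} + nR \ln\frac{V_2}{V_1}
\end{align*}
```

Under these assumptions the final expression contains only the end-state values \(T_1, V_1, T_2, V_2\), which is what path independence means in practice.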

Physical Properties

Physical properties are intrinsic characteristics of a substance that can be observed or measured without altering its chemical identity. These properties are fundamental in understanding the behavior and interactions of materials, and they play a crucial role in defining state functions. State functions, such as internal energy, enthalpy, and entropy, depend on the physical properties of a system. For instance, the internal energy of a system is influenced by its temperature, volume, and the number of moles of its components. Temperature, a key physical property, is a measure of the average kinetic energy of particles in a substance and directly affects the internal energy. Volume, another critical physical property, is the amount of space occupied by a substance. It is essential in calculating the pressure of a gas according to the ideal gas law (PV = nRT), where pressure (P), volume (V), and temperature (T) are interrelated. The density of a substance, which is mass per unit volume, also falls under physical properties and is crucial for understanding buoyancy and other phenomena. Specific heat capacity, which describes how much heat energy is required to raise the temperature of a unit mass of a substance by one degree Celsius, is another important physical property that influences the enthalpy changes in a system. Thermal conductivity and viscosity are additional physical properties that impact how substances respond to heat and flow. Thermal conductivity measures how efficiently heat is transferred through a material, while viscosity quantifies the resistance of a fluid to flow. These properties are vital in engineering applications where materials are chosen based on their ability to conduct heat or resist flow under various conditions. In the context of state functions, understanding these physical properties allows for precise calculations and predictions. 
For example, knowing the mass, the specific heat capacity, and the initial and final temperatures of a substance enables the calculation of the change in its internal energy when it is heated or cooled at constant volume. Similarly, knowing how the pressure and volume of a gas change, together with its change in internal energy, determines its enthalpy change during processes like expansion or compression, since H = U + PV.

Moreover, physical properties are often used as indicators of phase transitions (changes from one state of matter to another), which are critical in understanding state functions. For instance, melting point and boiling point are physical properties that define the temperatures at which a substance transitions between solid, liquid, and gas phases at a given pressure. These transitions involve significant changes in state functions such as entropy and enthalpy.

In summary, physical properties such as temperature, volume, density, and specific heat capacity provide the quantitative basis for calculating changes in internal energy, enthalpy, and entropy during physical processes, while transport properties such as thermal conductivity and viscosity govern how quickly a system moves from one state to another. By measuring and analyzing these properties accurately, scientists and engineers can predict and control the behavior of materials under different conditions.

Thermodynamic Context

In the thermodynamic context, state functions describe the properties of a system in a way that is independent of the path taken to reach a particular state. They are crucial because they allow us to predict and analyze the behavior of thermodynamic systems without knowing the specific details of how the system evolved from one state to another. The value of a state function is fixed by the state itself, so the change in a state function during a process is determined solely by the initial and final states.

One of the key state functions is internal energy (U), which represents the total energy within a system, including both the kinetic energy of molecules and the potential energy associated with molecular interactions. Another important state function is enthalpy (H), defined as the sum of internal energy and the product of pressure and volume (H = U + PV). Enthalpy is particularly useful in describing processes at constant pressure, such as reactions carried out in vessels open to the atmosphere, where the enthalpy change equals the heat exchanged.

Entropy (S) is another critical state function, often described as quantifying disorder or randomness in a system. It is related to how much thermal energy is unavailable to do work. The second law of thermodynamics states that the total entropy of an isolated system never decreases: it increases in any spontaneous (irreversible) process and stays constant only in the reversible limit.

Gibbs free energy (G), defined as G = H - TS, is another vital state function, especially in chemical reactions. It combines enthalpy, entropy, and temperature to predict whether a reaction will be spontaneous under given conditions (ΔG < 0 indicates spontaneity at constant temperature and pressure). This makes Gibbs free energy an indispensable tool for chemists and engineers seeking to understand reaction feasibility.

The concept of state functions also extends to other properties like volume (V) and temperature (T), which are intrinsic to the system's state regardless of how it was achieved. 
These properties can be measured directly or calculated using various thermodynamic relations. Understanding state functions is essential for predicting and controlling various processes in fields ranging from chemical engineering to materials science. For instance, in designing industrial processes, engineers rely on state functions to optimize conditions such as temperature and pressure to achieve desired outcomes efficiently. In materials science, knowing how state functions change with different conditions helps researchers develop new materials with specific properties. In summary, state functions provide a robust framework for analyzing and predicting the behavior of thermodynamic systems by focusing on the intrinsic properties that define their states. This path-independent nature makes them invaluable tools for scientists and engineers working across diverse fields where thermodynamics plays a critical role. By mastering these concepts, professionals can better design, optimize, and predict the outcomes of various processes, driving innovation and efficiency in their respective fields.
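The spontaneity criterion for Gibbs free energy described in this section can be illustrated with a short calculation. The sketch below evaluates ΔG = ΔH - TΔS for the melting of ice at three temperatures. The function name and the numerical values (approximate textbook figures for the enthalpy and entropy of fusion of water) are assumptions made for illustration, and ΔH and ΔS are treated as independent of temperature over this small range.

```python
def gibbs_energy_change(delta_h, delta_s, temperature):
    """Return dG = dH - T*dS in J/mol, valid at constant temperature and pressure."""
    return delta_h - temperature * delta_s


# Approximate textbook values for melting ice (assumed for illustration).
DELTA_H_FUSION = 6010.0  # J/mol
DELTA_S_FUSION = 22.0    # J/(mol*K)

for temperature in (263.15, 273.15, 283.15):  # -10 C, 0 C, +10 C
    delta_g = gibbs_energy_change(DELTA_H_FUSION, DELTA_S_FUSION, temperature)
    if abs(delta_g) < 5.0:
        verdict = "near equilibrium"
    elif delta_g < 0:
        verdict = "melting is spontaneous"
    else:
        verdict = "melting is not spontaneous"
    print(f"T = {temperature:.2f} K: dG = {delta_g:+.1f} J/mol ({verdict})")
```

With these inputs the sign of ΔG changes close to 273 K, which matches the everyday observation that ice melts above 0 °C and not below it.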

Characteristics and Properties of State Functions

State functions are fundamental concepts in thermodynamics, characterized by properties that make them indispensable for understanding and analyzing physical processes. They are examined here through three related ideas: path independence, reversibility, and conservation laws. Path independence signifies that the change in a state function depends solely on the initial and final states of a system, regardless of the specific path taken between them. Reversibility matters because changes in state functions, entropy in particular, are most conveniently calculated along reversible paths, in which the system stays in equilibrium throughout; note that a state function returns to its original value after any cyclic process, reversible or not. Conservation laws, above all the conservation of energy expressed by the first law of thermodynamics, are what establish internal energy as a state function. Understanding these ideas is crucial for predicting and interpreting thermodynamic phenomena accurately. Let us begin with path independence, which forms the cornerstone of state functions' behavior.

Path Independence

Path independence is the defining characteristic of state functions and distinguishes them from path-dependent quantities. In thermodynamics, a state function is a property whose value depends only on the current state of the system, not on the path by which the system reached that state. This means that if you start at one state and end at another, the change in a state function will be the same regardless of the specific route taken.

To illustrate this concept, consider the example of altitude. If you start at sea level and climb to the top of a mountain, your final altitude will be the same whether you took a direct route or a more circuitous one. Altitude is a state function because its value is determined solely by your current position, not by how you got there. In contrast, the distance traveled is path-dependent; it varies significantly depending on whether you took a straight path or a winding one.

This property makes state functions particularly useful in thermodynamic calculations. For instance, when calculating changes in internal energy (a state function), you only need to know the initial and final states of the system. The specific process followed to reach the final state does not affect the result, even though the heat and work exchanged along the way generally do depend on the process. This simplifies many thermodynamic problems, because the calculation can be carried out along whichever path is most convenient.

Another key state function that exhibits path independence is entropy. The change in entropy of a system is determined by its initial and final states, not by the specific process that occurred. This is crucial in understanding the second law of thermodynamics, which states that the total entropy of an isolated system never decreases and increases in every spontaneous process. Since entropy is path-independent, it provides a clear and consistent measure that can be used to predict the direction of spontaneous processes. 
In practical terms, path independence also underpins many engineering applications. For example, in designing a heat engine or refrigeration system, engineers rely on state functions like internal energy and entropy to predict performance and efficiency. Because these properties are path-independent, engineers can analyze different configurations and processes without needing to account for every detail of how the system transitions between states. In summary, path independence is a defining feature of state functions that makes them invaluable tools in thermodynamics. By ensuring that changes in these properties depend only on the initial and final states of a system, path independence simplifies calculations, enhances predictability, and provides a robust framework for understanding complex thermodynamic phenomena. This characteristic underscores why state functions are so central to both theoretical and practical applications in fields ranging from chemistry to engineering.
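Path independence can be checked numerically. The sketch below takes one mole of a monatomic ideal gas between the same two states along two different paths and compares heat, work, and the change in internal energy. The function names, the chosen states, and the monatomic heat capacity are assumptions made for illustration; the sign convention is ΔU = Q - W, with W the work done by the gas.

```python
import math

R = 8.314  # gas constant, J/(mol*K)


def isothermal_path(n, temperature, v1, v2):
    """Reversible isothermal expansion. Returns (Q, W), W = work done BY the gas."""
    work = n * R * temperature * math.log(v2 / v1)
    heat = work  # dU = 0 for an ideal gas at constant T, so Q = W
    return heat, work


def two_step_path(n, temperature, v1, v2, cv=1.5 * R):
    """Cool at constant volume, then expand at constant pressure to the same end state."""
    cp = cv + R
    p_final = n * R * temperature / v2   # pressure of the final state
    t_mid = p_final * v1 / (n * R)       # temperature after the constant-volume step
    heat = n * cv * (t_mid - temperature) + n * cp * (temperature - t_mid)
    work = p_final * (v2 - v1)           # the constant-volume step does no work
    return heat, work


# Same initial and final states for both paths: 300 K, 0.010 m^3 -> 300 K, 0.020 m^3
q_a, w_a = isothermal_path(1.0, 300.0, 0.010, 0.020)
q_b, w_b = two_step_path(1.0, 300.0, 0.010, 0.020)

delta_u_a = q_a - w_a
delta_u_b = q_b - w_b

print(f"Path A: Q = {q_a:.1f} J, W = {w_a:.1f} J, dU = {delta_u_a:.1f} J")
print(f"Path B: Q = {q_b:.1f} J, W = {w_b:.1f} J, dU = {delta_u_b:.1f} J")
```

Heat and work differ between the two paths, while the change in internal energy comes out the same (zero here, because the initial and final temperatures are equal).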

Reversibility

Reversibility is a fundamental concept in the context of state functions, which are properties of a system that depend only on the current state of the system, not on the path taken to reach that state. In thermodynamics, reversibility refers to a process that can be reversed without any residual effects on the system or its surroundings. This means that if a reversible process is carried out in one direction and then reversed, the system and its environment will return to their initial states. For instance, if an ideal gas is expanded slowly and isothermally (at constant temperature), it can be compressed back to its original volume with no net change in the gas or its surroundings, illustrating a reversible process.

The reversibility of a process is closely tied to the concept of equilibrium. In a reversible process, the system remains infinitesimally close to equilibrium with its surroundings at all times, so its state variables, such as temperature, pressure, and volume, change in infinitesimal steps. For example, during a reversible isothermal expansion of an ideal gas, the temperature of the gas remains constant because it is in thermal equilibrium with its surroundings.

Reversibility is crucial for understanding state functions because it gives a practical way to evaluate them. State functions like internal energy (U), enthalpy (H), and entropy (S) are path-independent, so the change between two states may be computed along any convenient path, and a reversible path is usually the easiest. Entropy in particular is defined through reversible heat transfer, \(dS = \delta Q_{\text{rev}}/T\). The first law of thermodynamics, ΔU = Q - W, where Q is the heat added to the system and W is the work done by the system, holds for any process, reversible or not. What reversibility adds is that Q and W can be written in terms of the system's own state variables.

However, real-world processes are irreversible to some degree due to factors such as friction, heat transfer across finite temperature differences, and spontaneous chemical reactions. Irreversible processes generate entropy, dissipate energy that could otherwise have done work, and cannot be undone without leaving a change in the surroundings. Despite this, understanding reversible processes provides a theoretical framework that helps in analyzing and predicting the behavior of real systems.

In summary, reversibility is a key idea for calculating state functions. It does not make them path-independent; rather, because they are path-independent, a change evaluated along an idealized reversible path also applies to the real, irreversible process between the same two states. While real processes are never perfectly reversible, the concept remains essential for understanding and predicting the behavior of thermodynamic systems.
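A standard worked example shows how a reversible path is used to evaluate a state function for an irreversible process. Take \(n\) moles of an ideal gas expanding freely into a vacuum from \(V_1\) to \(V_2\) in an insulated container; the ideal gas and the free expansion are assumptions chosen for illustration. No heat or work is exchanged on the actual path, so the entropy change is computed along a reversible isothermal path between the same two states.

```latex
\begin{align*}
\text{Actual (irreversible) path:}\quad & Q = 0,\quad W = 0,\quad \Delta U = 0,\quad \int \frac{\delta Q}{T} = 0 \\[4pt]
\text{Reversible isothermal path:}\quad & \delta Q_{\text{rev}} = P\,dV = \frac{nRT}{V}\,dV \\[4pt]
\Delta S = \int_{V_1}^{V_2} \frac{\delta Q_{\text{rev}}}{T} &= nR \ln\frac{V_2}{V_1} \;>\; 0 \\[4pt]
\text{Clausius inequality:}\quad & \Delta S \;\geq\; \int \frac{\delta Q}{T}
\end{align*}
```

The entropy change is the same for both paths because entropy is a state function; only the heat actually exchanged differs.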

Conservation Laws

Conservation laws are fundamental principles in physics that state that certain physical quantities remain constant over time within an isolated system. They are crucial for understanding the behavior and evolution of physical systems, because they provide a framework for predicting and analyzing changes in energy, momentum, angular momentum, and other key properties. The law of conservation of energy states that the total energy of an isolated system remains constant; energy can neither be created nor destroyed, only transformed from one form to another. This principle is what establishes internal energy as a state function: if the net change in internal energy around a closed cycle were not zero, repeating the cycle would create or destroy energy. The first law of thermodynamics expresses this by equating the change in internal energy to the heat added to the system minus the work done by it. The conservation of momentum and of angular momentum are analogous principles in mechanics, ensuring that these quantities remain invariant unless the system is acted upon by external forces or torques, respectively. Conservation and path independence are related but not identical ideas. Not every state function is a conserved quantity: entropy is a state function, yet the entropy of an isolated system can increase. Taken together, conservation laws allow changes in system properties to be calculated without knowing the detailed history of a process, which is the same practical benefit that state functions provide.
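The link between energy conservation and the state-function character of internal energy can be written compactly. The sketch below uses the convention ΔU = Q - W, with W the work done by the system, and integrates the first law around a closed cycle.

```latex
\begin{align*}
dU &= \delta Q - \delta W \\[4pt]
\oint dU &= 0 \qquad \text{(the system returns to its initial state)} \\[4pt]
\Rightarrow\quad \oint \delta Q &= \oint \delta W
\end{align*}
```

Over a full cycle the net heat absorbed equals the net work done, even though neither heat nor work is individually a state function.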

Examples and Applications of State Functions

State functions are fundamental concepts in thermodynamics, providing a framework to understand and analyze various physical and chemical processes. These functions are crucial because they depend only on the current state of a system, not on the path taken to reach that state. In this article, we will delve into three key examples of state functions: Internal Energy, Enthalpy, and Entropy. Each of these functions offers unique insights into different aspects of thermodynamic systems. Internal Energy, for instance, quantifies the total energy within a system, encompassing both kinetic and potential energies. Enthalpy, on the other hand, accounts for the total energy of a system including the energy associated with the pressure and volume of a system. Entropy, a measure of disorder or randomness, is vital for understanding the direction of spontaneous processes. By exploring these state functions in detail, we can better comprehend how energy is transformed and conserved in various applications. Let us begin by examining Internal Energy, which serves as a foundational concept in understanding the energetic state of any thermodynamic system.

Internal Energy

Internal energy, a fundamental concept in thermodynamics, is a state function that encapsulates the total energy of a system. It includes both the kinetic energy of the particles and the potential energy associated with their interactions. Unlike path-dependent functions such as work and heat, internal energy is a property of the system's state, meaning its value depends only on the current conditions of the system and not on how those conditions were achieved. This characteristic makes internal energy a powerful tool for analyzing and predicting the behavior of thermodynamic systems. To illustrate its significance, consider a simple example: heating water from room temperature to boiling point. The internal energy of the water increases as it absorbs heat energy, regardless of whether this heat is transferred through direct heating or by mixing it with hotter water. This increase in internal energy reflects both the rise in kinetic energy of the water molecules (as they move faster) and any changes in potential energy due to molecular interactions. The process can be visualized using an ideal gas model, where internal energy is directly proportional to temperature for an ideal gas, highlighting its role as a state function. In practical applications, understanding internal energy is crucial for designing efficient systems. For instance, in power plants, engineers aim to maximize the conversion of chemical energy stored in fuels into useful work while minimizing losses. By tracking changes in internal energy, they can optimize combustion processes and heat transfer mechanisms to achieve higher efficiencies. Similarly, in refrigeration systems, controlling internal energy helps maintain desired temperatures by managing heat absorption and release. Another critical application lies in chemical reactions. 
During a reaction, the internal energy change (\(\Delta U\)) equals the heat exchanged at constant volume, so its sign indicates whether the reaction is exothermic (releasing energy) or endothermic (absorbing energy) under those conditions; at constant pressure, the enthalpy change plays this role. This information feeds into predictions of reaction spontaneity and stability, although spontaneity also depends on the entropy change. For example, in the Haber process for ammonia synthesis, understanding the energy changes helps optimize reaction conditions such as pressure and temperature to favor the desired products.

Furthermore, internal energy plays a pivotal role in materials science. The study of phase transitions, such as melting or boiling, relies heavily on understanding how internal energy changes with temperature and pressure. These transitions are critical in manufacturing processes where precise control over material properties is essential. For instance, in the production of metals, knowing how internal energy affects crystal structure and phase stability allows for the creation of materials with specific mechanical properties.

In summary, internal energy is a cornerstone of thermodynamic analysis, offering insights into the fundamental properties of systems. Its status as a state function makes it an invaluable tool for predicting and optimizing processes across diverse fields, from power generation and refrigeration to chemical synthesis and materials science. By understanding and tracking internal energy, scientists and engineers can develop more efficient technologies that transform raw materials into useful products while minimizing energy waste.

Enthalpy

Enthalpy, denoted by the symbol \( H \), is a crucial state function in thermodynamics that combines the internal energy of a system with the product of its pressure and volume. It is defined as \( H = U + PV \), where \( U \) is the internal energy, \( P \) is the pressure, and \( V \) is the volume. This state function is particularly useful because it simplifies the analysis of many thermodynamic processes, especially those occurring at constant pressure, which is common in everyday life and industrial applications.

One of the key reasons enthalpy is so valuable is that, at constant pressure and with only pressure-volume work, the change in enthalpy equals the heat transferred. For instance, in chemical reactions conducted in open vessels or in processes involving phase changes like boiling or condensation, enthalpy changes directly relate to the heat absorbed or released. This makes it an essential tool for chemists and engineers who need to predict and measure energy changes in reactions and processes.

Enthalpy's practical applications are widespread. In chemistry, enthalpy changes (\( \Delta H \)) are used to determine whether a reaction is exothermic (releases heat) or endothermic (absorbs heat). For example, the combustion of fuels like gasoline or natural gas involves large negative enthalpy changes, which are critical for understanding the efficiency and safety of these processes. In industrial settings, such as power plants and chemical manufacturing facilities, accurate calculations of enthalpy are necessary for designing efficient systems and ensuring safe operations.

Moreover, enthalpy plays a vital role in food science and technology. The enthalpies of fusion and vaporization determine how much energy must be removed or supplied to freeze or boil the water in foods, which is essential for preservation techniques like freezing and canning. In biomedical engineering, enthalpy changes are relevant in studying metabolic processes and understanding how living organisms manage energy. In environmental science, enthalpy is used to study climate phenomena such as the formation of clouds and precipitation. The latent heat of vaporization, which is the enthalpy change on evaporation, is key to understanding how water cycles through the atmosphere and affects weather patterns.

Furthermore, enthalpy is integral to the design of HVAC systems (heating, ventilation, and air conditioning), where it helps in calculating the energy required to heat or cool buildings efficiently. In aerospace engineering, accurate calculations of enthalpy are necessary for designing propulsion systems and understanding the thermodynamic behavior of gases during flight.

In summary, enthalpy is a fundamental state function that is especially convenient for constant-pressure processes, making it indispensable across many fields. Its applications span from chemical reactions and industrial processes to food science, biomedical engineering, environmental studies, and more. By understanding and applying enthalpy, scientists and engineers can optimize processes, ensure safety, and develop more efficient technologies.
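The definition H = U + PV links enthalpy changes to internal energy changes. For a reaction among ideal gases at constant temperature it gives ΔH = ΔU + Δn_gas·R·T, where Δn_gas is the change in moles of gas. The sketch below applies this to ammonia synthesis, N2 + 3 H2 → 2 NH3. The function name is hypothetical, the reaction enthalpy is an approximate textbook value assumed for illustration, and ideal-gas behavior is assumed throughout.

```python
R = 8.314  # gas constant, J/(mol*K)


def internal_energy_change(delta_h, delta_n_gas, temperature):
    """Return dU = dH - dn_gas * R * T (ideal gases, constant temperature)."""
    return delta_h - delta_n_gas * R * temperature


# N2(g) + 3 H2(g) -> 2 NH3(g)
DELTA_H_REACTION = -92.2e3  # J per mole of reaction; approximate value, assumed
DELTA_N_GAS = 2 - (1 + 3)   # moles of gaseous products minus gaseous reactants

delta_u = internal_energy_change(DELTA_H_REACTION, DELTA_N_GAS, 298.15)

print(f"dH = {DELTA_H_REACTION / 1000:.1f} kJ/mol")
print(f"dU = {delta_u / 1000:.1f} kJ/mol")
```

Because the reaction reduces the number of gas molecules, the surroundings do work on the system at constant pressure, so ΔH is somewhat more negative than ΔU.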

Entropy

Entropy, a fundamental concept in thermodynamics, is a state function that is commonly described as quantifying the disorder or randomness of a system. It is related to the amount of thermal energy that is unavailable to do work. Entropy is denoted by the symbol \(S\) and is measured in units of joules per kelvin (J/K). The second law of thermodynamics states that the total entropy of an isolated system never decreases: it increases in every spontaneous process and remains constant only in the idealized reversible limit.

In practical terms, entropy can be observed in everyday phenomena. For instance, when you pour hot coffee into a cold cup, the heat from the coffee disperses throughout the cup and surrounding air, increasing the overall entropy of the coffee, cup, and air taken together. Similarly, in a chemical reaction, reactants often transform into products with higher entropy due to increased molecular motion and disorder. This principle underpins many industrial processes, such as power generation in thermal power plants, where high-temperature steam expands through turbines to produce electricity and total entropy increases as heat is rejected from the steam to the surroundings.

Entropy also plays a crucial role in biological systems. Living organisms maintain their highly organized structures by importing energy and matter from their environment, keeping their local entropy low at the expense of increasing the entropy of their surroundings. This balance is essential for life processes such as metabolism and cellular organization.

In information theory, entropy has an analogous meaning: it measures the uncertainty or randomness of information. Claude Shannon introduced this concept to quantify the amount of information in a message, and it has since been applied in fields like data compression and cryptography.

From an engineering perspective, understanding entropy is vital for designing efficient systems. 
In refrigeration systems, for example, entropy changes are critical for determining the minimum work required to transfer heat from one location to another. Similarly, in aerospace engineering, managing entropy is crucial for optimizing engine performance and ensuring efficient energy conversion. The concept of entropy extends beyond physical systems to economic and social contexts as well. Economic entropy can be seen as a measure of economic disorder or inefficiency within an economy. High economic entropy might indicate wasteful resource allocation or inefficient market processes. In conclusion, entropy is a versatile state function that encapsulates the idea of disorder or randomness across various domains—from thermodynamic systems to information theory and even economic analysis. Its applications are diverse and profound, influencing how we understand and manage energy, information, and resources in our daily lives and technological endeavors. By grasping the principles of entropy, we can better design systems that maximize efficiency and minimize waste, aligning with the broader goals of sustainability and resource optimization.
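Two of the examples in this section, heat flowing from a hot body to a cold one and the minimum work needed for refrigeration, can be put into numbers. The sketch below treats the hot and cold bodies as reservoirs at fixed temperatures, which is a simplifying assumption, and the function names and input values are hypothetical. The minimum-work formula follows from requiring that the total entropy change be at least zero.

```python
def entropy_change_heat_flow(heat, t_hot, t_cold):
    """Total entropy change (J/K) when `heat` flows from a reservoir at t_hot to one at t_cold."""
    return heat / t_cold - heat / t_hot


def minimum_refrigeration_work(heat_removed, t_cold, t_hot):
    """Least work (J) needed to move `heat_removed` from t_cold up to t_hot.

    From dS_total = -Q_c / T_c + (Q_c + W) / T_h >= 0, so W >= Q_c * (T_h - T_c) / T_c.
    """
    return heat_removed * (t_hot - t_cold) / t_cold


# 1000 J flowing spontaneously from 350 K to 300 K (temperatures in kelvin)
delta_s_total = entropy_change_heat_flow(1000.0, 350.0, 300.0)
print(f"Spontaneous heat flow: dS_total = {delta_s_total:.3f} J/K")

# Removing 1000 J from a 250 K interior and rejecting it to 300 K surroundings
w_min = minimum_refrigeration_work(1000.0, 250.0, 300.0)
print(f"Minimum work for refrigeration: {w_min:.1f} J")
```

The first result is positive, as the second law requires for a spontaneous process; the second shows that moving heat in the opposite direction always costs work, with the reversible limit setting the lower bound.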